I have tried to stay with one concurrent theme with my blogs. I have been writing about the best way to use SQL Server Default tools. Not to say I don’t believe or trust in third party vendor tools to help analyze your data. I think those tools hold good value and can help save money and time. I believe in using what yo’mamma gave you. As I have mentioned before this will ultimately save your department money while still giving you the tools needed to exceed yearly expectations.

I have titled this blog This Ain’t your Mamma’s Tuning because the Database Engine Tuning Advisor (DTA) is not what is used to be. DTA is a sophisticated tool that will help you optimize your database by providing useful information. Then when you combine DTA with SQL Profiler, oh my gosh do you have the power of Grayskull at your finger tips. (Showing my age a little, and my nerd-ness.) As I have blogged about in the past you have the ability to use Profiler and run several different kinds of traces, now lets talk about how we can load those traces into DTA and then analyze our findings. But first, lets review the features of DTA.

DTA Can:

- Consider trade offs of physical design structures like clustered/non clustered indexes, views and even partitions. T-SQL commands are also reviewed; such as selects, inserts, updates, and deletes when selecting your troubled index combinations. This means that the overhead with your T-SQL commands are analyzed as part of the recommended indexing scheme. It will also look at trade offs in which columns are arranged.

- Determine which physical structures are not needed in a database. It will find the indexes that are never used.

- Look at the ‘What-if’s’. You can see if a particular index should be clustered or not to better optimize your performance.

- Analyze single or batches of statements in a SQL Profiler trace which can be stored in native format, or in a table.

- Work with statements that have UDF’s, triggers, or temp tables.

DTA produces several reports allowing you to really break down how your database is working on the inside. Basically DTA can help optimize your databases physical structures by looking at statements found at any level on the server.

Now the LumberJack Can:

- Take the overhead of the trace and push it to a test server, even if the test server is not a perfect mirror of production.

- Use either the GUI interface or a command prompt. The CMD can help use less overhead.

- Choose to tune physical structures for one or several databases at the same time.

- Choose how long to run a trace by setting a trace time. This is especially useful if you need a quick results on a statement that usually takes a long time to run. Basically your only looking at a chuck of the statement.

- Save the analyses to review them at any time. This will help you compare results at different time periods.

Go Big or Go Home!

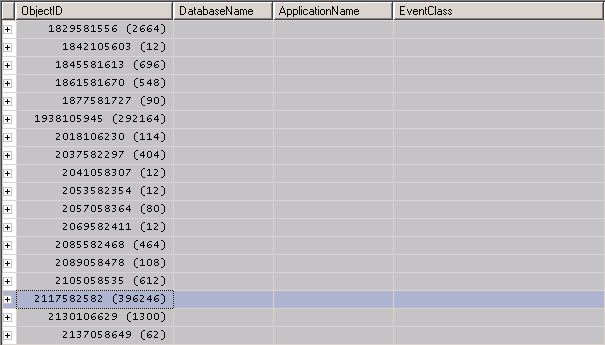

Not Really, actually in this case it’s probably best to ‘go little and get home’. DTA is only going to analyze three events so don’t use more then needed. In fact in most cases your trying to analyze performance issues so again, why put more on the server when your trying to eliminate that very thing. So Lumberjacks, you have two choices. You can run a low overhead trace or load up a low overhead workload file. I prefer to load up a low overhead workload file.

Low Overhead Trace

We need to keep in mind that the most powerful way to use this tool is to provide a workload file. The file should be from a trace that contains activity during high usage hours. As we discussed earlier lets keep these traces light weight. SQL Profiler offers a low impact template to address this very situation. Don’t worry about arranging columns or filtering columns in a low overhead trace. Save your trace to a file and not a table. Those little things can add overhead to the server. The Tuning template will capture what we need.

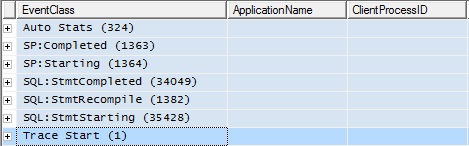

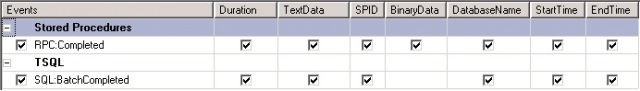

- Stored Procedures

- RPC: Completed

- SP:StmtCompleted

- TSQL

- SQL:BatchCompleted

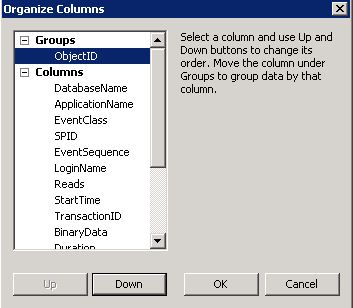

It also includes the seven data columns that will provide DTA more than enough. As you can see this is a lightweight trace. However, if you wanted to remove any of the events to make the trace even lighter, which on a overloaded production server is not the worst idea. You can create the trace from scratch and remove some columns. If you make it yours and it’s not compatible with DTA you will get an error. These are the columns that I put in any DTA trace, any others are of no use to DTA.

- TextData: This will find what code is running in each statement

- Duration: Not technically ‘required’ but it tells you which statements are taking a long time to run.

- SPID: This is required when your gathering data for the profiler trace. DTA can use the SPID to identify which database the event took place.

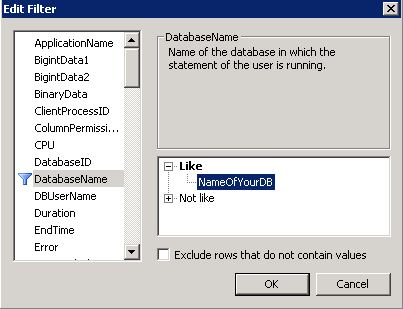

- DatabaseName: This is another one that is not required but lets be realistic you need to see which database your looking at.

- LoginName: Not requied but again, it’s good to know who is running these statements. Could be a user or it could just be the application login.

On an overloaded production server the trace file will grow quickly. Be sure to keep an eye on the trace file and your allocated space. Again, run the trace during high usage to gather as much as you can in little time. DO NOT STOP the trace, use the pause button. You can always use the pause button if people start complaining of performance issues. It’s better to run the DTA on a single file instead of multiple little ones. If you stop it and have to create multiple files you can load them into a table later. However, it’s a pain to set up DTA to analyze all that. Just don’t stop it!

Kick the Tires and Light the Fires!

DTA can be launch from either SSMS or Profiler. You will need to log into the server that includes the database you want to analyze. Also note that your login must have sysadmin and dbo_owner.

Working from a Workload file:

Again you can analyze directly from DTA but in this blog we’re looking at running DTA from our previous trace. We are also looking at one database and it’s indexes.

Once DTA starts:

- Name your Session

- Choose the workload file you just created by click on the ‘Browse for Workload file’ icon

- Select the Database for workload analysis

- Choose the same database in the ‘Select databases and tables to tune.

- Click on the Tuning Options Tab

- Choose Indexes (This is the default option)

- Choose Full Partitioning

- Choose Keep all existing PDS

Click Start Analysis!

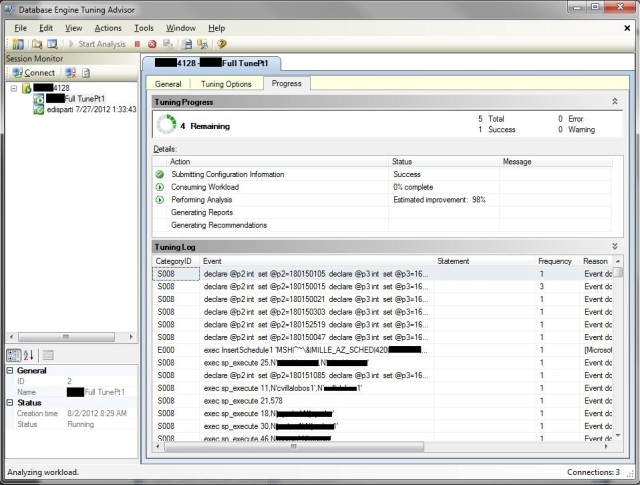

Once you click ‘Start Analysis’ a Progress tab will open. You should see a Tuning Progress window.

This process can take minutes or hours depending on your workload file and how long you ran your trace. When it’s complete it will open to the Recommendations Tab. You will see Partition Recommendations and Index Recommendations. It will also show you the percentage improvement in performance if you implement all the recommendations. The actual percentage of what you get, if you implemented all the recommendations is most likely less due to the workload file. Unless you ran your trace all day, and your DTA took very very long to analyze that percentage is not entirely accurate, but you will still see improvement.

The Reports Tab will show you the ‘Tuning Summary’ details. This will show you how many statements can be tuned, how many index recommendations are suggested, etc. You also have the option of extra reports. At the bottom of the reports tab you will see the ‘Tuning Reports’ section. Expand the drop down and view the different reports. I like to use these when sending recommendations to vendors. Always choose both the ‘current’ and ‘recommended’ reports for comparisons.

Do I Accept all recommendations?

No, no you don’t. This is where YOU can shine. A lazy and very optimistic DBA will accept all recommendations. However, my fellow lumberjacks you need to review the recommendations and save the results. I’m not saying I’m not confident in DTA, I’m saying be smart. Look at this trace and DTA as a baseline. Click Actions > Save Recommendations.

Go re-run your trace, maybe at a differnt time of day and see what DTA recommends. If you find DTA is making the same recommendations it’s a safe to say it’s a good recommendation and go ahead and implement that change.

If for instance it’s a index creation you can do it manually or let DTA implement automatically. Personally, I like to do these manually and document everything I do. Am I Union? Anyway… If you want to allow DTA to implement them automatically just make sure the check box is checked next to the each recommendation. Since applying these recommendations is likely to slow down the server, possibly even dropping an index and creating a new one in it’s place; you want to schedule these to run during a scheduled outage/upgrade. If you have been in the IT world long enough you know exactly what I’m talking about. When you click Actions > Apply Recommendations you will get a ‘Apply Recommendations’ window where you have the option to ‘Schedule for later’. Since I like to do these manually I like to click the definition link and find the raw T-SQL Code. I will then copy that to the clipboard and run each on in SSMS. I will always recommend running these in a test environment prior to running these in production. When you run these recommendations either manually or automatically in a test environment you will get an fair idea of how long your outage will be and if the recommendations are successful. Please Please always test first.

Good Job!

As I said ‘This Ain’t your Mamma’s Tuning’. DTA is an amazing tool that every DBA should play with before using third party tools. If you running DTA on a vendor supported database and send those recommendations to the vendor they “should” be more open to those recommendations over a non-Microsoft tool. If your department has the means or already have third party tools…by all means use both. For now, DTA is awesome and you already have it in your tool shed. Don’t go asking for a sharper ax when you already have a pretty nice one.